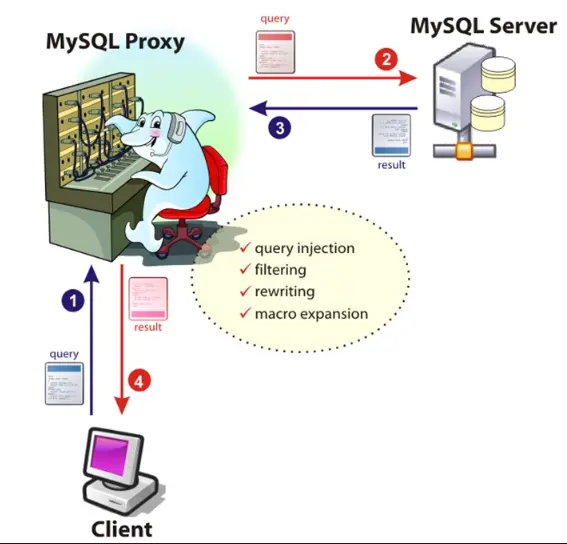

| How MHA handles a master failure

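1. Monitor the nodes (information about all nodes is read from the configuration file)

System, network, and SSH connectivity

Replication status, with the focus on the master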
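
As a rough illustration of how the node list can be read from a configuration file, the sketch below parses an MHA-style file with Python's standard `configparser`. The hostnames, paths, and the helper name `load_nodes` are hypothetical, and this is not how MHA itself (which is written in Perl) loads its configuration.

```python
import configparser

# Hypothetical MHA-style configuration; hostnames and paths are invented for illustration.
SAMPLE_CNF = """
[server default]
manager_workdir=/var/log/mha/app1
ssh_user=root
ping_interval=2

[server1]
hostname=10.0.0.51
port=3306

[server2]
hostname=10.0.0.52
port=3306
candidate_master=1
check_repl_delay=0

[server3]
hostname=10.0.0.53
port=3306
"""

def load_nodes(text):
    """Return one dict per [serverN] section, in file order, with shared defaults merged in."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    defaults = {}
    if parser.has_section("server default"):
        defaults = dict(parser["server default"])
    nodes = []
    for section in parser.sections():
        if section == "server default":
            continue
        # Per-server settings override the shared defaults.
        nodes.append({**defaults, **dict(parser[section]), "name": section})
    return nodes

if __name__ == "__main__":
    for node in load_nodes(SAMPLE_CNF):
        print(node["name"], node["hostname"], node.get("candidate_master", "0"))
```

The file order is preserved, which matters because the election step falls back on configuration-file order when the slaves hold identical data.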

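2. Master election

(1) If the slaves differ in data (judged by position or GTID), the slave closest to the master becomes the candidate master.

(2) If the slaves hold identical data (judged by position or GTID), the candidate master is chosen in configuration-file order.

(3) If a weight is set (candidate_master=1), the weighted node is forcibly designated as the candidate master.

1. By default, if a slave is more than 100 MB of relay logs behind the master, its weight is ignored even though it is set.

2. If check_repl_delay=0, the slave is still forced to be the candidate master even when it is far behind in the logs.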
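
The election rules above can be sketched as a small function. This is a simplified model, not MHA's actual code: the `Slave` class, the integer `applied_pos` standing in for position/GTID progress, and the `relay_lag_bytes` field are all assumptions made for illustration.

```python
from dataclasses import dataclass

# The 100 MB relay-log threshold mentioned in the notes above.
RELAY_LAG_LIMIT = 100 * 1024 * 1024

@dataclass
class Slave:
    name: str
    applied_pos: int                # simplified stand-in for binlog position / GTID progress
    candidate_master: bool = False  # models candidate_master=1
    check_repl_delay: bool = True   # models check_repl_delay (False means =0)
    relay_lag_bytes: int = 0        # relay logs not yet applied, in bytes

def pick_candidate(slaves):
    """Pick the candidate master from slaves listed in configuration-file order."""
    # Rule (3): a weighted slave wins, unless it lags too far and the delay check is on.
    for s in slaves:
        if s.candidate_master:
            too_far_behind = s.check_repl_delay and s.relay_lag_bytes > RELAY_LAG_LIMIT
            if not too_far_behind:
                return s
    # Rules (1) and (2): the most advanced slave wins; max() returns the first
    # maximal item, so ties are resolved by configuration-file order.
    return max(slaves, key=lambda s: s.applied_pos)

if __name__ == "__main__":
    slaves = [
        Slave("server2", applied_pos=900, candidate_master=True,
              relay_lag_bytes=200 * 1024 * 1024),
        Slave("server3", applied_pos=1000),
    ]
    print(pick_candidate(slaves).name)
```

In the usage example the weighted slave is 200 MB behind with the delay check enabled, so the function falls back to the most advanced slave; setting `check_repl_delay=False` on it would make it the candidate again.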

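3. Data compensation

(1) When SSH to the master still works, each slave is compared with the master by GTID or position, and the missing binary logs are immediately saved to every slave node and applied (save_binary_logs).

(2) When SSH to the master does not work, the relay-log differences between the slaves are compared and applied (apply_diff_relay_logs).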
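
The two compensation paths can be illustrated with a toy model in which each log is a list of event names and every slave's log is a prefix of the source log. The function name `plan_compensation` and the data layout are hypothetical; the real work is done by the MHA tools `save_binary_logs` and `apply_diff_relay_logs`, which operate on actual log files.

```python
def plan_compensation(master_ssh_ok, master_binlog, slave_logs):
    """Return, per slave, the events it still needs to apply.

    master_binlog: list of events on the failed master (usable only if SSH works).
    slave_logs:    dict mapping slave name -> list of events it already has.
    Assumes every slave's log is a prefix of the chosen source log.
    """
    if master_ssh_ok:
        # Path (1): the master's binary log is reachable, so it is the source of truth.
        source = master_binlog
    else:
        # Path (2): fall back to the most advanced slave's relay log.
        source = max(slave_logs.values(), key=len)
    # Each slave needs whatever follows the events it already holds.
    return {name: source[len(log):] for name, log in slave_logs.items()}

if __name__ == "__main__":
    master = ["e1", "e2", "e3", "e4"]
    slaves = {"server2": ["e1", "e2", "e3"], "server3": ["e1", "e2"]}
    print(plan_compensation(True, master, slaves))
    print(plan_compensation(False, master, slaves))
```

When the master is unreachable, event `e4` cannot be recovered in this model, which is the gap that the later secondary compensation step is meant to address.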

4. Failover

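The candidate master is promoted to master and starts serving clients.

The remaining slaves establish a new replication relationship with the new master.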

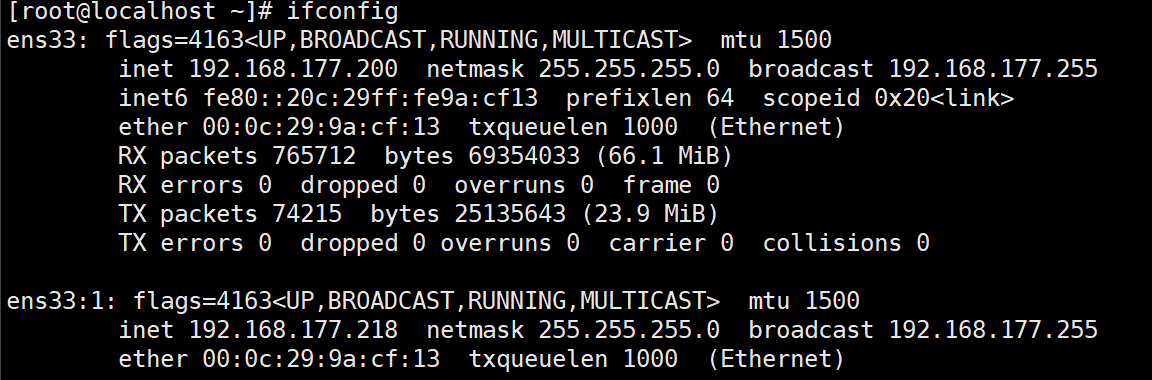

5. Application transparency (VIP)

Provided by MHA itself

6. Failover notification (send_report)

7. Secondary data compensation (binlog_server)

|